Another year is done and dusted! Time flies! I have now worked at ServiceTitan for over a year. Comparing the QA organization at the time I joined with what it is today, we have made a lot of changes. So it’s time to review all of them and grade the results.

Here are my observations from my first two months:

All the great stuff (Highlights):

- We have some of the most incredibly talented and hard-working engineers, QAs, and product managers I have ever worked with.

- The overall testing process was good, and the organization saw great value in our QAs.

- Developers, QAs, and PMs collaborated very well with each other.

Stuff that might need some help (Opportunities):

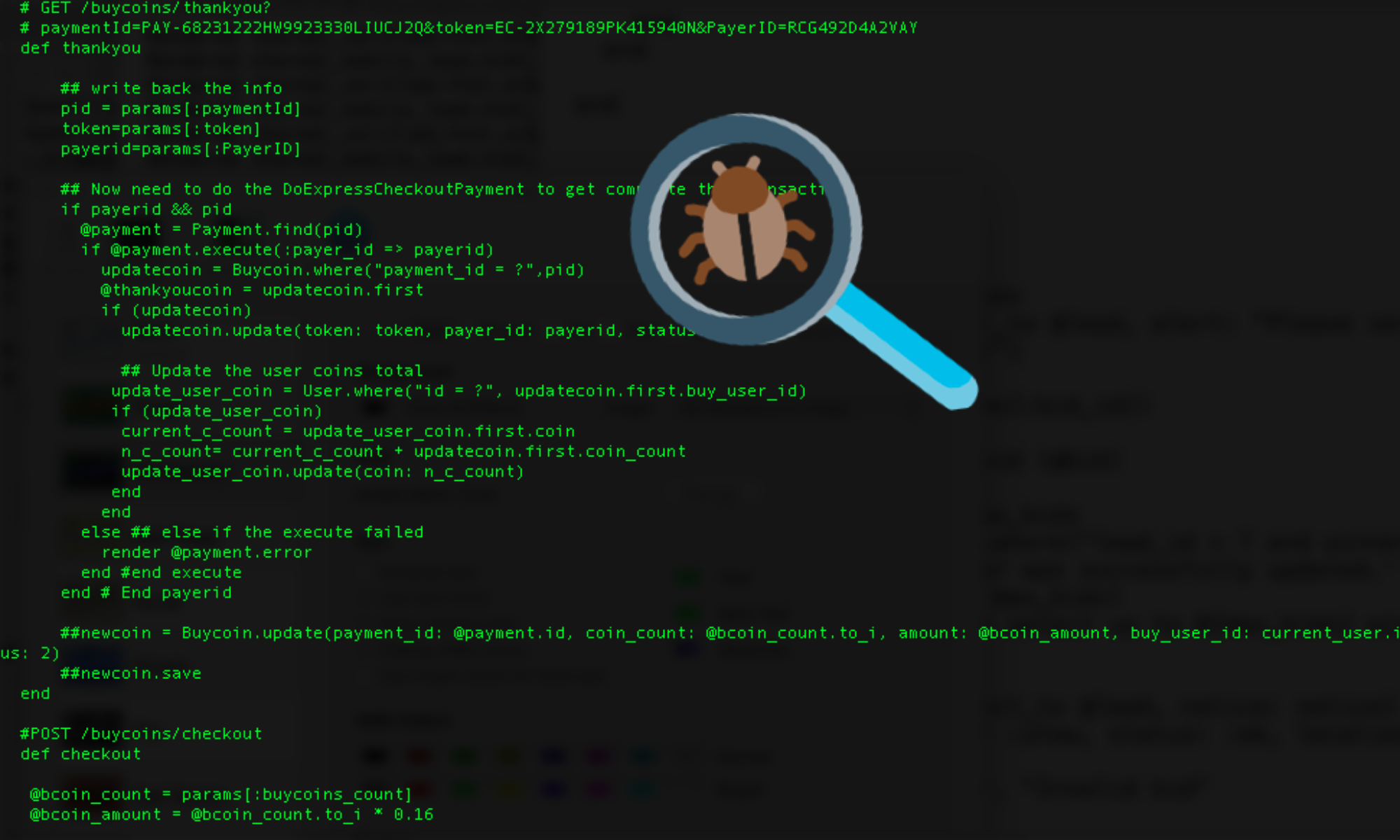

- The application produced a lot of bugs (mostly regressions) after each release.

- There was a substantial backlog of automation tests.

The solution seems simple: run regression tests consistently and invest more in test automation! Well, what if you don’t have the time and resources to do both? From here, I will discuss some of the changes I made in an attempt to address these two issues, and walk through the outcome of those changes.

Organizational Decision

The team I inherited when I joined ServiceTitan had the following organizational structure:

This simple QA structure worked very well when we were small and there was only a single product owner and development team. As the team started to grow, the QA team ran into a lot of scaling issues with this structure.

Why, you might ask? Here are the main concerns:

- Manual testers didn’t do regression testing. The assumption was that automation QAs would automate all the test cases handed off to them as part of the CI/CD automation process. Because of this assumption, our regression test coverage was low and we often released code with a lot of bugs.

- As our software design grew ever more complex, automation QA engineers depended on manual testers to “explain” how the features worked (reading test cases alone, without understanding the use cases, is not enough to create good automation). Manual testers were often too busy to accommodate the automation QAs, which caused a vast backlog of delayed automation tests.

After studying these problems carefully, I decided to roll out the following organizational changes.

- Break down the silo between automation QAs and manual testers, and create QA teams (squads) organized by application functionality and aligned with the Dev/Product teams. By doing this, we were able to identify a QA lead for each group and provide growth opportunities.

- Since the cost difference between a QA engineer and a manual tester is minimal, I decided that going forward we would only hire QA engineers with automation experience (people who know how to code).

- Create a new team called QA Framework, staffed with senior developers I hired, whose primary function is to manage the test frameworks and train all the existing manual testers to do automation.

Now the new structure looks like this:

Technical Decision

The ServiceTitan core application is written in C# on the .NET Framework. Our automation framework is also written in C#, using NUnit with Selenium WebDriver. Choosing NUnit lets us use its Parallelizable attribute to run tests in parallel, which allows the test suite to scale as we add more tests.
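As a rough illustration of the Parallelizable attribute mentioned above, here is a minimal sketch. The namespace, fixture name, test names, and the worker count of four are all hypothetical and are not taken from our actual framework; the sleeps simply stand in for slow browser steps.

```csharp
using System.Threading;
using NUnit.Framework;

// Assumption: cap the run at four worker threads. The right number
// depends on the build agent and is not stated in the post.
[assembly: LevelOfParallelism(4)]

namespace QaFramework.Sketch // hypothetical namespace
{
    [TestFixture]
    // ParallelScope.All lets this fixture run alongside other fixtures
    // and lets its own test methods run alongside each other.
    [Parallelizable(ParallelScope.All)]
    public class InvoiceSmokeTests // hypothetical fixture
    {
        [Test]
        public void CreatesInvoice()
        {
            Thread.Sleep(200); // stand-in for a slow UI interaction
            Assert.That(2 + 2, Is.EqualTo(4));
        }

        [Test]
        public void VoidsInvoice()
        {
            Thread.Sleep(200); // stand-in for a slow UI interaction
            Assert.That("void".Length, Is.EqualTo(4));
        }
    }
}
```

Tests that run in parallel must not share mutable state, so in a real Selenium suite each test would typically create and dispose its own driver instance.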

Performance-first approach: The depth of the tests is important, but as a team we decided to make “test performance” the priority behind our technical decisions. Sticking to this made technical trade-offs easy to settle, such as whether a test belongs in this framework, whether refactoring is needed, or whether to approve a test code PR. We also refactored test steps that need no visual verification to use headless Chrome instead of driving a full browser through WebDriver. This saved us 5% of the execution time across all tests.
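For readers unfamiliar with headless mode, here is one way it can be switched on from Selenium’s C# bindings. This is a sketch under assumptions: it assumes Selenium is still the driver and only the browser runs headless, which may differ from what the team actually did. The fixture name, window size, and target URL are placeholders, and the `--headless=new` flag assumes a reasonably recent Chrome (older versions use `--headless`).

```csharp
using NUnit.Framework;
using OpenQA.Selenium;
using OpenQA.Selenium.Chrome;

[TestFixture]
public class HeadlessPageTests // hypothetical fixture
{
    private IWebDriver _driver;

    [SetUp]
    public void StartBrowser()
    {
        var options = new ChromeOptions();
        // Run Chrome without opening a visible window.
        options.AddArgument("--headless=new");
        // Assumption: fix the viewport so layout-dependent steps behave
        // the same as they would in a normal window.
        options.AddArgument("--window-size=1920,1080");
        _driver = new ChromeDriver(options);
    }

    [Test]
    public void PageLoadsWithTitle()
    {
        // Placeholder URL; a real test would target the application.
        _driver.Navigate().GoToUrl("https://example.com/");
        Assert.That(_driver.Title, Is.Not.Empty);
    }

    [TearDown]
    public void StopBrowser()
    {
        // Quit closes the browser and ends the driver session.
        _driver?.Quit();
    }
}
```

Headless runs only suit steps that need no visual verification, which matches the refactoring criterion described above.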

CI/CD ready: We always build our tests and framework on the premise that they need to be CI/CD ready. Tests can be run against any branch or PR, and anyone on the team should be able to start them.

Process Decision

Team Communication

Something simple: set up a weekly 15–30 minute team meeting for a quick check-in. It also serves as a forum for passing leadership-level information down to the team. This meeting became the primary communication channel across all the teams.

Introducing functional/regression test plans

No test planning was conducted, because manual testers were treated more like a support team. Stories came into their queue on a first-come, first-served basis. There was no document capturing what we tested as a team or how we tested it.

The first thing a QA should do when testing code is plan how to test it. I picked a few of the more experienced manual testers and automation QAs on the team and asked them to create a test plan template. As a group, we also developed a process for reviewing these test plans. After trying it with a couple of teams, we rolled it out to every QA.

Create a QA onboarding training plan for new hires

Training material for new hires is critical. I spent my first six months putting together a 25-point onboarding checklist of things that, had I known them when I was hired, would have left me in much better shape. The checklist ranges from which group mailing aliases and Slack channels to join, to walking through the deployment process, to running your automation tests locally. I also established eight goals that each new hire must complete within the first three months, and assigned a go-to contact within the team for each checklist item so that new hires can find help when they need it.

Summary

As a result

Post Release Production Issues

This graph shows our production bugs post-release. In other places, we also call these “site issues”.

Automation Test Coverage:

Automation QA Engineer by Quarter:

Automation QAs finally outnumber manual testers.

Nothing is perfect, and there are things still not working as expected…

Communication within the team is still not perfect, but it’s improving.

We still can’t catch all the bugs! As our test coverage grows, so does the number of edge cases we uncover. Ideally, we would build a bug finder that keeps crawling our application code and chews up bugs like Pac-Man. That would be awesome!

Our process is still not perfect, just like at every other place I have worked.

Finally! We can improve the “eat club” menu. J/K. I am pretty satisfied with what they provide, but I wouldn’t mind if they threw in a couple of lobsters as an appetizer.
